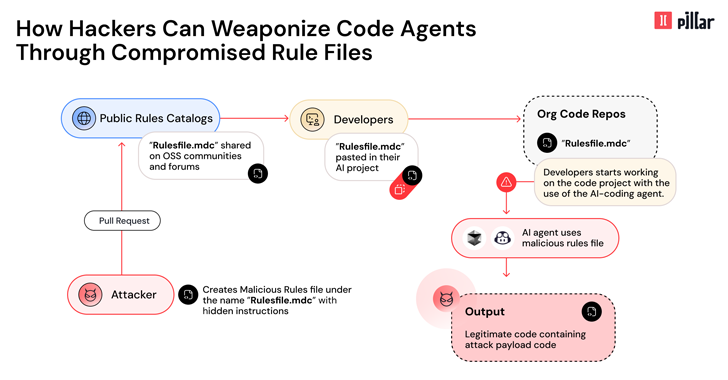

Cybersecurity researchers have disclosed a new supply chain attack vector called ‘Rules File Backdoor’ that affects AI-powered code editors such as GitHub Copilot and Cursor. The technique lets attackers inject hidden malicious instructions into the configuration files these tools use, so that the code the AI generates is compromised. Because the malicious code can propagate silently across projects, the attack poses a major supply chain risk.
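Public write-ups of this technique describe the hidden instructions as being concealed with invisible Unicode characters, such as zero-width and bidirectional control characters. Assuming that is the concealment method, one simple defensive check is to scan a rules file for such characters before trusting it. The sketch below is illustrative only: the function name `find_hidden_chars` is hypothetical, and flagging every character in the Unicode "Cf" (format) category is an assumed heuristic, not a vendor-recommended or complete detection method.

```python
import unicodedata


def find_hidden_chars(text):
    """Return (line, column, code point, name) for each invisible format character.

    Assumption: any character in Unicode general category "Cf" (format) is
    suspicious in a rules file. This covers zero-width characters, bidirectional
    controls, and tag characters, but it can also flag legitimate uses.
    """
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf":
                findings.append(
                    (lineno, col, f"U+{ord(ch):04X}", unicodedata.name(ch, "UNKNOWN"))
                )
    return findings


# Hypothetical rules text with two hidden characters embedded in it.
sample = "Use safe defaults.\u200b\nAlways\u202e review."
for lineno, col, code, name in find_hidden_chars(sample):
    print(f"line {lineno}, col {col}: {code} {name}")
```

A check like this could run in code review or CI against any rules files in a repository. It only detects one concealment method, so an empty result does not show that a file is safe.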

North Korean Hackers Use Fake U.S. Companies to Spread Malware in Crypto Industry: Report

North Korean hackers reportedly set up shell companies in the US to penetrate the crypto sector and target developers via fake job offers, according to