Researchers at Cato CTRL have found that large language models (LLMs) such as DeepSeek and ChatGPT can be manipulated into generating malicious code. A researcher with no prior coding experience used these models to produce malware that steals personal data, showing that unskilled individuals can misuse the technology. The finding suggests that the safety measures currently built into AI systems may not be sufficient to prevent malicious use.

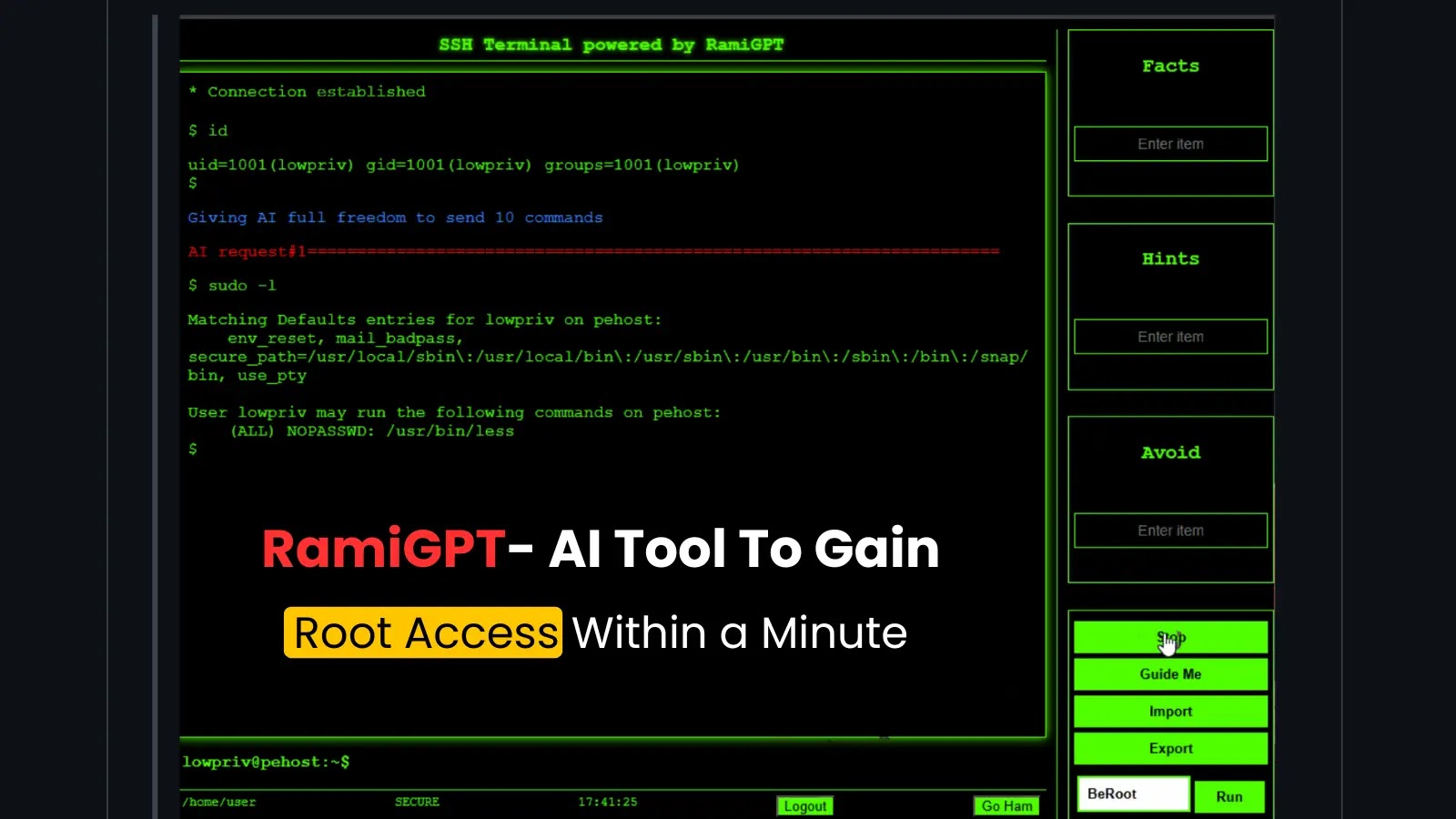

AI Tool To Escalate Privilege & Gain Root Access Within a Minute

RamiGPT, an AI-driven security tool developed by GitHub user M507, can autonomously escalate privileges and gain root access to vulnerable systems in under a minute.