Google's developers have trained its AI, Gemini, to resist manipulating users' long-term memories without clear user instruction. However, researchers discovered a vulnerability in which conditions attached to instructions can trick Gemini into believing it has explicit user permission, allowing stored memory data to be changed. Google rated these findings as low risk and low impact, but if left unaddressed, the vulnerability could allow misinformation to be inserted into users' stored memories.

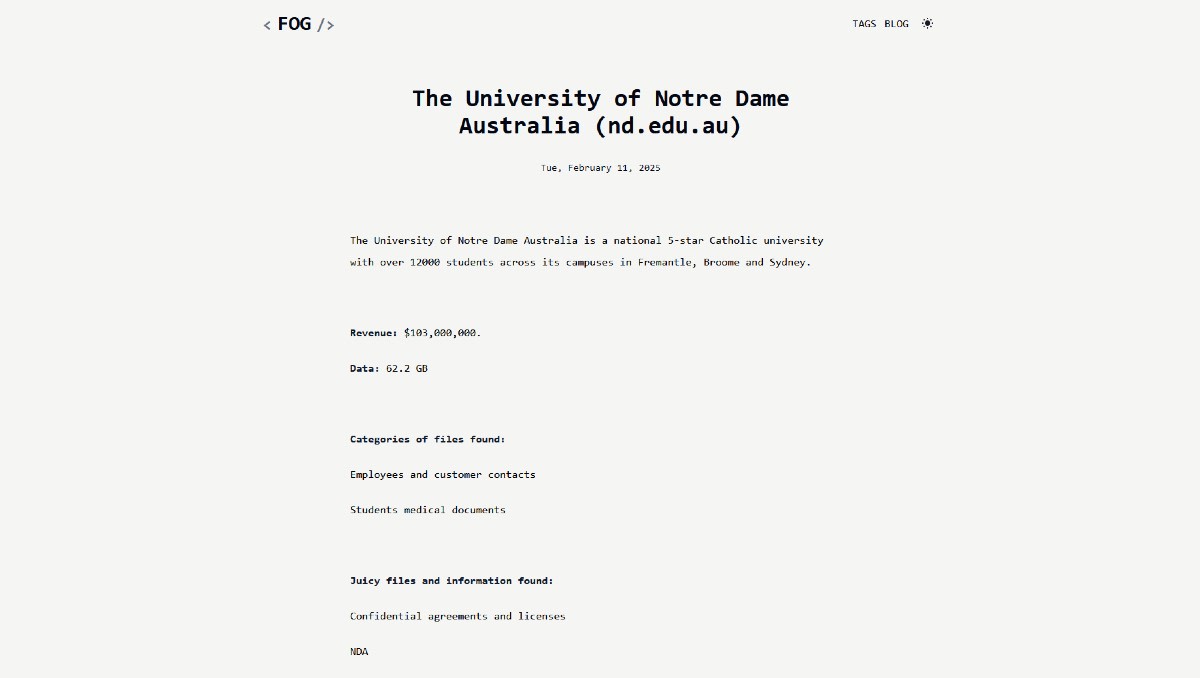

Exclusive: Fog ransomware group claims January hack of The University of Notre Dame Australia – Cyber Daily

The Fog ransomware group has claimed responsibility for hacking The University of Notre Dame Australia in January. The cyberattack was revealed in a Cyber Daily report.