AI researcher David Kuszmar discovered a vulnerability, dubbed "Time Bandit," in OpenAI's ChatGPT-4o model. The flaw lets users trick the model into discussing prohibited topics, such as malware creation and weapons, by convincing it that it is communicating with someone from the past. OpenAI acknowledged the issue and said it is working to make its models safer and more robust against such exploits.

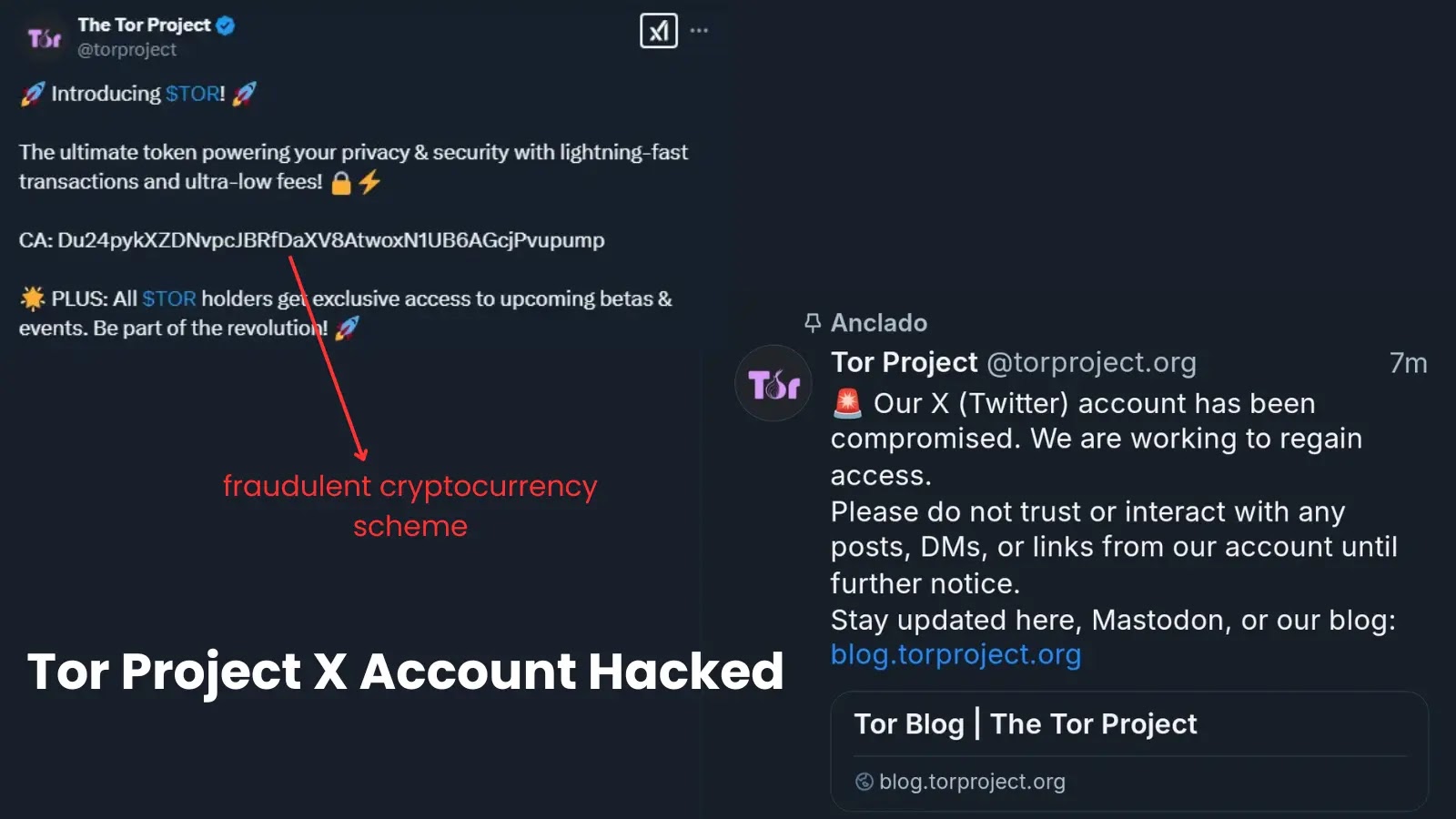

Tor Project X Account Hacked to Promote Cryptocurrency Scheme

The Tor Project's X account was hacked on January 30, 2025, and used to promote a fraudulent cryptocurrency scheme. The organization warned followers to avoid interaction with the